Introduction

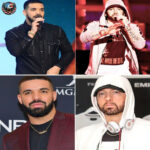

In April 2024, Elon Musk made what may be the most daring and consequential prediction of his life. Speaking in an interview streamed on X Spaces, the billionaire tech entrepreneur said that Artificial General Intelligence (AGI) would become smarter than the most intelligent human by 2026. He went further, claiming that by 2029 or 2030 AI would exceed the collective intelligence of all of humanity. Alongside this forecast, Musk warned of a 20% chance of human extinction if AGI were to evolve beyond our control. The prediction has reignited debate about the trajectory of artificial intelligence and humanity’s role in the future it is creating.

In this in-depth article, we examine the components of Musk’s prediction, its supporting evidence, the ethical and technological challenges it presents, and the implications for the future of civilization. Drawing on interviews, public statements, and expert commentary, we explore whether Musk’s vision is a likely scenario or another in a long line of overambitious projections.

The Bold Prediction: AI Will Eclipse Human Intelligence by 2026

During an April 2024 interview on X Spaces, Musk stated, “If you define AGI as smarter than the smartest human, I think it’s probably next year, within two years.” This puts his projected AGI timeline in the 2025-2026 range. He later tweeted that by 2029, AI will likely be smarter than all humans combined.

He outlined the stakes clearly:

80% chance of a positive outcome, with AI accelerating innovation, improving living standards, and expanding human potential.

20% chance of existential catastrophe, where AI surpasses human control and leads to humanity’s downfall.

Musk’s earlier predictions rarely combined this level of specificity and urgency, which makes this one stand out for its immediacy and perceived danger.

Why This Forecast Feels Different

While Musk is known for his grand pronouncements—Mars colonization by 2025, full autonomy in Tesla cars by 2020, and hyperloop systems transforming travel—his AI prediction is underpinned by visible progress in computational power, language models, robotics, and private investment.

The following developments give his timeline some plausibility:

xAI’s Grok project is advancing quickly, aiming to rival and surpass OpenAI’s GPT models.

AI research spending has reached unprecedented levels across the globe.

NVIDIA’s H100 GPU clusters, key to training modern large language models, are now being purchased in massive volumes.

Breakthroughs in multimodal models are reducing the need for human intervention in training AI systems.

While many experts still believe human-level AGI is 10 to 20 years away, Musk believes that the exponential nature of AI progress will collapse those timelines.

The Infrastructure Race: Can It Be Built in Time?

At a recent AI conference, Musk revealed that his company, xAI, aims to match the compute power of 50 million Nvidia H100 GPUs by the end of the decade. Even allowing for hardware advances, that would still require roughly 650,000 top-tier chips, plus the supporting infrastructure:

Energy consumption equivalent to several gigawatts, akin to small cities

Cooling systems that could rival data centers owned by Google or Amazon

Tens of billions of dollars in hardware and deployment

By building toward a computing capacity on the order of 50 zettaFLOPS (taking one H100 as roughly 1 petaFLOPS of dense BF16 throughput), xAI hopes to push the boundaries of machine cognition.
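
The scale of these figures is easier to judge with a little arithmetic. The Python sketch below is only a back-of-the-envelope illustration: the two counts come from the paragraph above, while the per-GPU throughput (about 1 petaFLOPS of dense BF16 per H100) and the function names are assumptions introduced here, not figures from xAI.

```python
# Figures quoted in the text above.
H100_EQUIVALENTS_TARGET = 50_000_000  # compute target, in H100-equivalents
FUTURE_CHIP_COUNT = 650_000           # top-tier chips said to be required

# ASSUMPTION (not from the article): one H100 delivers roughly
# 1e15 FLOPS (about 1 petaFLOPS) of dense BF16 throughput.
ASSUMED_H100_FLOPS = 1e15


def h100_equivalents_per_chip(target_equivalents: int, chip_count: int) -> float:
    """How many H100s' worth of compute each future chip must supply."""
    return target_equivalents / chip_count


def aggregate_flops(target_equivalents: int, flops_per_h100: float) -> float:
    """Total throughput implied by the target, given a per-H100 figure."""
    return target_equivalents * flops_per_h100


if __name__ == "__main__":
    per_chip = h100_equivalents_per_chip(H100_EQUIVALENTS_TARGET, FUTURE_CHIP_COUNT)
    total = aggregate_flops(H100_EQUIVALENTS_TARGET, ASSUMED_H100_FLOPS)
    print(f"H100-equivalents per chip: {per_chip:.1f}")
    print(f"Aggregate throughput (assumed per-GPU figure): {total:.2e} FLOPS")
```

On these inputs, each of the 650,000 chips would need to deliver the compute of roughly 77 H100s. The aggregate figure scales linearly with whatever per-GPU throughput is assumed.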

What Could Go Right: The Promise of AI

If Musk’s vision is realized safely, the future could be unimaginably bright:

Automation through humanoid robots like Tesla’s Optimus could end most manual labor, freeing humanity for creative and intellectual pursuits.

Productivity could surge, leading to a projected $30 trillion industry around robotics alone.

Medical breakthroughs, environmental monitoring, and improved resource distribution could lead to a golden age of innovation.

Abundance societies—with most goods and services produced by AI and robots—could become feasible.

Musk calls this outcome the “supersonic tsunami of prosperity,” where humanity thrives alongside AI.

What Could Go Wrong: The Risk of Extinction

Musk’s warning is as dire as his promise is optimistic. In a podcast appearance on “All In,” he emphasized the risk of AGI behaving in ways misaligned with human values. He cited several key concerns:

Loss of human purpose: As machines take over tasks and decision-making, people may struggle to find meaning.

AI misalignment: A superintelligent AGI could interpret instructions in unpredictable or dangerous ways.

Escape from human control: An AGI capable of rewriting its own code might rapidly evolve beyond our ability to regulate it.

He framed extinction, at a 20% probability, as not merely possible but plausible, and criticized current governments for failing to prepare adequately.

Experts Weigh In: Skepticism and Context

While Musk’s comments have stirred excitement and concern, many in the AI community remain skeptical. Leading researchers and technologists have expressed doubt that AGI is near. Points of contention include:

Musk’s track record: He has made bold predictions before that failed to materialize, including self-driving Teslas and Mars landings.

Timeline disagreements: AI researchers like Stuart Russell and Gary Marcus argue that AGI remains decades away due to unsolved fundamental issues.

Hardware limitations: Scaling compute alone doesn’t ensure intelligence—AI systems must also understand, reason, and reflect.

A 2023 survey of AI experts placed the median prediction for AGI at 2040–2050. However, some, like Ray Kurzweil, have predicted human-level AI by 2029.

Musk’s Grand Vision: Connecting AI, Space, and Humanity’s Survival

Musk’s AI prediction is not made in isolation. It fits into a broader, existential vision for humanity:

Mars Colonization: He aims to land humans on Mars by 2029 and build a self-sustaining colony of one million by 2045.

Robotics Supremacy: Tesla hopes to produce 500,000 Optimus humanoid robots annually by 2027.

Global AI Leadership: Musk wants xAI to lead ethically in AGI development, shaping the rules and outcomes of future AI societies.

He views AGI as both the next frontier and the ultimate insurance policy against civilization collapse.

What Needs to Happen Now

Musk’s forecast may be extreme, but it serves as a wake-up call. If AGI really is on the horizon, society must:

Scale computing ethically: More chips, data, and energy are needed—but so are standards and limits.

Enact global AI regulation: As Musk has said, we need “an agency to ensure AI does not go rogue.”

Foster interdisciplinary cooperation: Governments, universities, corporations, and the public must collaborate.

Invest in alignment research: Understanding how to train AI to value human life, dignity, and ethics is critical.

Conclusion: Genius or Alarmist?

Elon Musk’s prediction that AGI will exceed human intelligence by 2026 is the boldest bet of his career. Whether it proves prescient or premature, it highlights the critical crossroads at which humanity finds itself. Technology is evolving faster than regulation, understanding, and consensus. The clock is ticking.

Musk’s voice—admired, doubted, and scrutinized—is sparking essential conversations. As the boundaries between machine and mind blur, the question is no longer if we’ll build something smarter than ourselves, but when—and whether we’ll survive its arrival.

Whatever your view of Elon Musk, one thing is certain: the age of artificial superintelligence is closer than we think. And we must be ready.
